As the autonomous vehicle (AV) industry marches toward commercial deployment at scale, the challenge isn’t just to create better algorithms to make this possible – we need better, smarter data, and vast amounts of it.

That’s what drives Gatik Arena™ – our closed-loop, sensor-accurate, and physics-based simulation platform purpose-built to accelerate the development and deployment of autonomous freight while saving time and cost and maximizing safety. Built in-house and fine-tuned to Gatik’s operational and technical needs, Gatik Arena™ pushes the frontier of safety-critical scenario generation, including precise control over ego vehicle trajectories, the dynamic behavior of other road users, and full manipulation of environments – such as adverse weather conditions, ambient lighting, and diverse terrain. In addition to rich, realistic scenarios, Gatik Arena™ also generates rare but safety-critical edge cases that go far beyond what real-world driving data alone can offer.

Gatik Arena™ is purpose-built for freight-only autonomy to simulate complex logistics networks covering multiple markets and nuanced edge-case conditions with unmatched precision – enabling rapid scaling of safe, efficient, and cost-effective Freight-Only (Driverless) operations, without waiting for the real world to serve up perfect test cases.

In this blog, we cover the core technologies behind 3D reconstruction, a key component of Gatik Arena™.

3D Reconstruction

At the core of Gatik Arena™ is a high-fidelity 3D world model – or the “digital twin.” Using advanced 3D reconstruction, we create digital twins of real-world environments that enable repeatable, controlled, and scalable testing. A digital twin is a high-fidelity virtual replica of the real world, designed to capture the geometry, texture, and dynamic behavior of roads, buildings, vehicles, pedestrians, and even weather patterns. For autonomous vehicles, this means modeling every drivable lane, curb, traffic signal, and subtle lighting variation that could influence sensor perception. Achieving this level of realism from raw sensor data is a complex challenge.

The first step in building a digital twin is reconstructing the world as seen through our sensors – cameras, lidars, and radars. This 3D reconstruction process produces the world model, which then serves as the basis for sensor simulation – generating synthetic but highly realistic camera, lidar, and radar outputs for testing. In recent years, 3D reconstruction from images has been driven by two groundbreaking technologies: Neural Radiance Fields (NeRFs) and 3D Gaussian Splatting (3DGS). We briefly present the core idea behind each of these methods.

Neural Radiance Fields

In this technique, we leverage the concept of radiance fields – functions that assign a color and a density to every 3D point as seen from every viewing direction – to reconstruct scenes with high fidelity. In our NeRF pipeline, we train a neural network to estimate the color and density at each point and direction, giving us an approximation of the radiance field. Because the scene is stored only in the network’s weights, and its color and density can be recovered only by querying the network at each point/direction pair, NeRF is an implicit representation of the scene.
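In the notation commonly used for NeRFs, the trained network is a function with weights $\Theta$ that maps a 3D position and a viewing direction to a color and a density. This is the generic textbook formulation, not a description of Gatik’s specific network; in the original NeRF formulation the density depends on position only, while the color also depends on direction.

```latex
$$
F_\Theta : (\mathbf{x}, \mathbf{d}) \mapsto (\mathbf{c}, \sigma),
\qquad \mathbf{x} = (x, y, z) \in \mathbb{R}^3,\quad
\mathbf{d} \in \mathbb{S}^2,\quad
\mathbf{c} \in [0, 1]^3,\quad \sigma \ge 0
$$
```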

Our NeRF pipeline runs in three stages. In the first stage, we capture multiple images of a scene from multiple viewpoints. From every pixel in each image, we cast a ray from the camera center through that pixel; its direction, denoted d, is determined by the camera pose (viewpoint) and the camera intrinsics. Points along this ray are sampled using our ray-marching strategy, and the coordinates x, y, and z represent the 3D position of each sampled point.
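The first stage can be illustrated with a minimal sketch of ray generation and sampling, assuming an ideal pinhole camera and stratified sampling (one random depth per equal-width bin along the ray, as in the original NeRF work). The names `pixel_ray`, `stratified_samples`, and `points_along_ray`, and all numeric values, are hypothetical; the production ray-marching strategy may differ.

```python
import math
import random


def pixel_ray(u, v, focal, cx, cy, cam_to_world, cam_origin):
    """Return (origin, unit direction d) of the ray through pixel (u, v).

    Hypothetical pinhole model: the camera looks down +z in its own frame,
    (cx, cy) is the principal point, and cam_to_world is the 3x3 rotation
    (list of rows) taken from the camera pose.
    """
    d_cam = [(u - cx) / focal, (v - cy) / focal, 1.0]
    d_world = [sum(cam_to_world[r][k] * d_cam[k] for k in range(3)) for r in range(3)]
    norm = math.sqrt(sum(c * c for c in d_world))
    return list(cam_origin), [c / norm for c in d_world]


def stratified_samples(t_near, t_far, n, rng):
    """Split [t_near, t_far] into n equal bins and draw one depth uniformly in each."""
    width = (t_far - t_near) / n
    return [t_near + (i + rng.random()) * width for i in range(n)]


def points_along_ray(origin, d, ts):
    """3D sample positions x(t) = o + t * d, one (x, y, z) triple per depth t."""
    return [[origin[k] + t * d[k] for k in range(3)] for t in ts]


IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
rng = random.Random(0)
origin, d = pixel_ray(320, 240, 500.0, 320.0, 240.0, IDENTITY, [0.0, 0.0, 0.0])
ts = stratified_samples(2.0, 6.0, 8, rng)
points = points_along_ray(origin, d, ts)
```

Equal-width bins guarantee that the whole depth interval is covered, while the random offset inside each bin lets the network see a slightly different set of depths in every training iteration.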

Our goal is to estimate the radiance at the sampled points. To this end, in the second stage, our neural network predicts the color (RGB values) and density at every such point, taking as input the position and direction of each point, <x, y, z, d>.
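To make the network’s interface concrete, the sketch below replaces the trained network with a hypothetical analytic scene (a solid sphere) that has the same inputs and outputs described above: it takes a position (x, y, z) and a viewing direction d and returns an RGB color and a density. The function name, the sphere, and the shading rule are illustrative assumptions; in a real NeRF these outputs come from a trained neural network.

```python
import math

BASE_RGB = (0.8, 0.3, 0.2)  # hypothetical surface color of the toy sphere


def toy_radiance_field(x, y, z, d):
    """Hypothetical stand-in for the trained network: (x, y, z, d) -> (rgb, sigma).

    The "scene" is a solid unit sphere at the origin. Density depends only on
    the position; the color also depends on the unit viewing direction d.
    """
    r = math.sqrt(x * x + y * y + z * z)
    sigma = 50.0 if r <= 1.0 else 0.0  # dense inside the sphere, empty space outside
    if r > 0.0:
        normal = (x / r, y / r, z / r)
        # Brightest when the ray travels straight into the surface (d opposite the normal).
        facing = max(0.0, -(normal[0] * d[0] + normal[1] * d[1] + normal[2] * d[2]))
    else:
        facing = 1.0
    rgb = tuple(c * (0.3 + 0.7 * facing) for c in BASE_RGB)
    return rgb, sigma


# Same point, two viewing directions: identical density, different color.
rgb_a, sigma_a = toy_radiance_field(0.0, 0.0, -0.9, (0.0, 0.0, 1.0))
rgb_b, sigma_b = toy_radiance_field(0.0, 0.0, -0.9, (0.0, 0.0, -1.0))
```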

In the final stage, we perform volumetric rendering to compute the color of every pixel in a 2D image rendered from a specified (“novel”) camera viewpoint. This color is accumulated along the ray emanating from the pixel. The predicted density tells us how likely the ray is to hit an object at each point, so points in empty space (near-zero density) contribute almost nothing to the accumulated color, while points on surfaces dominate it. The predicted color gives the color at position x, y, z when viewed from direction d.
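The accumulation along the ray is usually implemented with the standard NeRF quadrature: each sample gets an opacity $\alpha_i = 1 - e^{-\sigma_i \delta_i}$, where $\delta_i$ is the distance to the next sample, and a transmittance $T_i = \prod_{j<i} (1 - \alpha_j)$ that measures how much light survives the samples in front of it. The pixel color is $\sum_i T_i \alpha_i \mathbf{c}_i$. Below is a minimal single-ray sketch of that formula; `volume_render` and the background term are illustrative assumptions. Empty-space samples ($\sigma_i = 0$) automatically receive zero weight.

```python
import math


def volume_render(sigmas, colors, deltas, background=(0.0, 0.0, 0.0)):
    """NeRF-style numerical volume rendering along one ray.

    sigmas[i] and colors[i] are the predicted density and RGB color at sample i;
    deltas[i] is the distance from sample i to sample i + 1.
    Returns the pixel color and the per-sample weights T_i * alpha_i.
    """
    pixel = [0.0, 0.0, 0.0]
    weights = []
    transmittance = 1.0  # T_i: probability the ray reaches sample i unoccluded
    for sigma, rgb, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # probability the ray stops in this segment
        w = transmittance * alpha
        weights.append(w)
        for k in range(3):
            pixel[k] += w * rgb[k]
        transmittance *= 1.0 - alpha
    for k in range(3):
        pixel[k] += transmittance * background[k]  # light that passes every sample
    return pixel, weights


# A ray through empty space, then a dense surface at the second sample.
pixel, weights = volume_render(
    [0.0, 1e4, 5.0],
    [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)],
    [0.5, 0.5, 0.5],
)
```

Because the weights $T_i \alpha_i$ are differentiable in $\sigma_i$ and $\mathbf{c}_i$, the same computation written in an autodiff framework is what lets the network be trained from photometric error.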

While NeRF produces highly photorealistic novel views, its implicit nature means rendering is computationally expensive – each pixel may require hundreds of network queries – making it challenging for real-time applications.
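For a sense of scale, assume a single 1920 × 1080 camera image and 192 samples per ray, in line with the 64 coarse plus 128 fine samples used in the original NeRF paper. Both numbers are illustrative assumptions, not Gatik’s settings:

```latex
$$
\underbrace{1920 \times 1080}_{\text{pixels}} \times \underbrace{192}_{\text{samples per ray}}
= 398{,}131{,}200 \approx 4 \times 10^{8}\ \text{network queries per frame}
$$
```

A vehicle with several cameras multiplies this count for every simulated time step.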

3D Gaussian Splatting offers an explicit alternative, storing the scene as a collection of Gaussian primitives. This representation enables much faster rendering by avoiding per-sample network queries, trading some memory efficiency for significant speed gains.

3D Gaussian Splatting

In this technique, instead of sampling points along rays in the 3D world and predicting their density and color, we project 3D Gaussians onto the 2D image plane – a process known as splatting – and compare the resulting image with the true image. This approach eliminates the need for ray marching, significantly boosting rendering speed. Our 3D Gaussian splatting pipeline consists of three stages: (1) generating a point cloud, (2) initializing a 3D Gaussian at each point, and (3) refining the Gaussians.
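In the published 3D Gaussian Splatting formulation, which is assumed here (the exact variant in Gatik’s pipeline is not stated), a Gaussian with mean $\boldsymbol{\mu}$ and covariance $\boldsymbol{\Sigma}$ is splatted by transforming its center into camera coordinates, $\mathbf{t} = W\boldsymbol{\mu} + \mathbf{b}$ with world-to-camera rotation $W$ and translation $\mathbf{b}$, and by approximating the perspective projection locally with its Jacobian $J$. For a pinhole camera with focal lengths $f_x, f_y$ and principal point $(c_x, c_y)$:

```latex
$$
\boldsymbol{\mu}' = \begin{pmatrix} f_x\, t_x / t_z + c_x \\ f_y\, t_y / t_z + c_y \end{pmatrix},
\qquad
J = \begin{pmatrix}
f_x / t_z & 0 & -f_x t_x / t_z^2 \\
0 & f_y / t_z & -f_y t_y / t_z^2
\end{pmatrix},
\qquad
\boldsymbol{\Sigma}' = J\, W\, \boldsymbol{\Sigma}\, W^\top J^\top
$$
```

Since $J$ is $2 \times 3$, $\boldsymbol{\Sigma}'$ is the $2 \times 2$ image-space covariance; each Gaussian needs only $\boldsymbol{\mu}'$ and $\boldsymbol{\Sigma}'$ to be drawn, with no marching along rays.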

In the first stage, we use point clouds directly from the lidar sensors on our AV platform. In the second stage, we initialize a 3D Gaussian at each lidar point, placing its mean at the point’s (x, y, z) coordinates. Each 3D Gaussian is defined by its mean, opacity, covariance, and color.
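A minimal sketch of the initialization step, assuming the covariance is factored into a per-axis scale and a rotation quaternion, $\Sigma = R S S^\top R^\top$. That factorization comes from the published 3DGS method, where it keeps $\Sigma$ positive semi-definite during optimization. The initial scale is the mean distance to the three nearest lidar points, another heuristic borrowed from that method; the function names, default opacity, and gray starting color are hypothetical.

```python
import math


def quat_to_rotation(w, x, y, z):
    """Unit quaternion (w, x, y, z) -> 3x3 rotation matrix as a list of rows."""
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]


def covariance(scale, quat):
    """Sigma = R S S^T R^T: symmetric positive semi-definite by construction."""
    r = quat_to_rotation(*quat)
    m = [[r[i][j] * scale[j] for j in range(3)] for i in range(3)]  # M = R S
    return [[sum(m[i][k] * m[j][k] for k in range(3)) for j in range(3)] for i in range(3)]  # M M^T


def init_gaussians(points, k=3, opacity=0.1, color=(0.5, 0.5, 0.5)):
    """Hypothetical initializer: one Gaussian per lidar point, mean at the point."""
    gaussians = []
    for i, p in enumerate(points):
        # Brute-force nearest neighbours; a k-d tree would be needed for real lidar sweeps.
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        nearest = dists[:k]
        s = max(sum(nearest) / len(nearest), 1e-3) if nearest else 1e-3
        gaussians.append({
            "mean": tuple(p),
            "scale": (s, s, s),                # isotropic to start with
            "rotation": (1.0, 0.0, 0.0, 0.0),  # identity quaternion
            "opacity": opacity,
            "color": color,
        })
    return gaussians


lidar_points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
gaussians = init_gaussians(lidar_points)
sigma0 = covariance(gaussians[0]["scale"], gaussians[0]["rotation"])  # identity matrix here
```

Storing scale and rotation instead of the raw covariance means any values the optimizer later produces still describe a valid ellipsoid.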

In the final stage, we first project the 3D Gaussians into 2D images using a technique known as “rasterization” (note that this is different from “ray marching”). For each pixel, the Gaussians whose projections overlap that pixel are sorted by depth and blended front to back, so each one contributes in proportion to its opacity and to how much of the pixel is still uncovered by the Gaussians in front of it. Because 3D Gaussians are an explicit representation, their positions are known beforehand and we do not need to sample points along each ray. This makes rendering much faster than with NeRFs.
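The per-pixel blending can be sketched as follows, assuming each Gaussian has already been projected to a 2D mean and a 2D covariance. A splat’s alpha at the pixel is its opacity times the Gaussian falloff $\exp(-\tfrac{1}{2}\,\Delta^\top \Sigma'^{-1} \Delta)$, and contributions are blended nearest-first with the same transmittance bookkeeping used in volume rendering. The 0.99 alpha cap and 1/255 skip threshold mirror common 3DGS implementations but are assumptions here, as is the `splat_pixel` name.

```python
import math


def splat_pixel(px, py, splats, background=(0.0, 0.0, 0.0), min_transmittance=1e-4):
    """Front-to-back alpha blending of projected 2D Gaussians at pixel (px, py).

    Each splat is a dict with a 2D 'mean', a 2x2 covariance 'cov' as
    [[a, b], [b, c]], a camera-space 'depth', an 'opacity', and an RGB 'color'.
    """
    pixel = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of the pixel not yet covered by nearer splats
    for s in sorted(splats, key=lambda g: g["depth"]):  # nearest first
        (a, b), (_, c) = s["cov"]
        dx, dy = px - s["mean"][0], py - s["mean"][1]
        # Squared Mahalanobis distance using the inverse of the 2x2 covariance.
        m = (c * dx * dx - 2.0 * b * dx * dy + a * dy * dy) / (a * c - b * b)
        alpha = min(0.99, s["opacity"] * math.exp(-0.5 * m))
        if alpha < 1.0 / 255.0:
            continue  # negligible at this pixel
        for k in range(3):
            pixel[k] += transmittance * alpha * s["color"][k]
        transmittance *= 1.0 - alpha
        if transmittance < min_transmittance:
            break  # pixel is effectively opaque; farther splats cannot show through
    for k in range(3):
        pixel[k] += transmittance * background[k]
    return pixel


near_red = {"mean": (10.0, 10.0), "cov": [[4.0, 0.0], [0.0, 4.0]], "depth": 5.0, "opacity": 0.5, "color": (1.0, 0.0, 0.0)}
far_blue = {"mean": (10.0, 10.0), "cov": [[4.0, 0.0], [0.0, 4.0]], "depth": 9.0, "opacity": 0.5, "color": (0.0, 0.0, 1.0)}
rgb = splat_pixel(10.0, 10.0, [far_blue, near_red])  # [0.5, 0.0, 0.25]; input order does not matter
```

Production rasterizers sort once per screen tile on the GPU rather than per pixel, but the blending arithmetic is the same.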

We then optimize the parameters of the 3D Gaussians so that their 2D projections look similar to the 2D image. It is important to note that it is the 3D Gaussians that are optimized, not the 2D projected Gaussians. The original 2D images serve only as training data to optimize the 3D Gaussians.
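A toy version of this optimization, under heavy simplifications: a hypothetical 1D pinhole camera, Gaussians that live in a 2D world (x, z), additive blending without occlusion, and only the colors being learned. It illustrates the point above: gradient updates are written into the world-space Gaussians, and their image-space footprints are recomputed from those world-space parameters by projection at every step. In practice, means, covariances, opacities, and colors are all optimized together through a differentiable rasterizer in an autodiff framework.

```python
import math

FOCAL, CX, WIDTH = 10.0, 20.0, 40  # hypothetical 1D pinhole camera with 40 pixels


def project(g):
    """World-space Gaussian (x, z, isotropic std) -> image-space mean and std."""
    return FOCAL * g["x"] / g["z"] + CX, FOCAL * g["std"] / g["z"]


def footprints(gaussians):
    """Weight of every Gaussian at every pixel, derived from its world-space parameters."""
    rows = []
    for g in gaussians:
        mu, s = project(g)
        rows.append([math.exp(-0.5 * ((u - mu) / s) ** 2) for u in range(WIDTH)])
    return rows


def render(gaussians, w=None):
    """Additive rendering without occlusion: I(u) = sum_k w_k(u) * color_k."""
    w = footprints(gaussians) if w is None else w
    return [sum(w[k][u] * g["color"] for k, g in enumerate(gaussians)) for u in range(WIDTH)]


def fit_colors(gaussians, target, lr=2.0, steps=200):
    """Gradient descent on the mean squared pixel error, updating world-space colors in place."""
    losses = []
    for _ in range(steps):
        w = footprints(gaussians)  # re-projected from the current world-space Gaussians
        residual = [p - t for p, t in zip(render(gaussians, w), target)]
        losses.append(sum(r * r for r in residual) / WIDTH)
        for k, g in enumerate(gaussians):
            # dL/dc_k = (2 / WIDTH) * sum_u residual(u) * w_k(u)
            g["color"] -= lr * 2.0 / WIDTH * sum(residual[u] * w[k][u] for u in range(WIDTH))
    return losses


true_colors = [0.2, 0.9, 0.5, 0.7]
scene = [{"x": x, "z": 2.0, "std": 0.4, "color": c} for x, c in zip([-3.0, -1.0, 1.0, 3.0], true_colors)]
target = render(scene)                       # stands in for a captured camera image
model = [dict(g, color=0.0) for g in scene]  # same geometry, unknown colors
losses = fit_colors(model, target)
```

Because the rendered image here is linear in the colors, the loss is a convex quadratic and plain gradient descent recovers the colors that generated the target image.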

While this method gives us the speed advantages we need for real-time AV simulation, it comes with a trade-off: storing explicit 3D Gaussians requires significant memory for all their parameters.
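A back-of-the-envelope estimate of that memory cost, assuming the parameterization of the published 3DGS method: 3 floats for the mean, 3 for the scale, 4 for a rotation quaternion, 1 for opacity, and RGB spherical-harmonic coefficients for view-dependent color. The 10-million-Gaussian scene size is a hypothetical round number, not a measured Gatik figure.

```python
def floats_per_gaussian(sh_degree=3):
    """Parameters per Gaussian under the assumed 3DGS parameterization."""
    mean, scale, rotation, opacity = 3, 3, 4, 1
    color = 3 * (sh_degree + 1) ** 2  # RGB x number of spherical-harmonic coefficients
    return mean + scale + rotation + opacity + color


def scene_memory_gb(num_gaussians, sh_degree=3, bytes_per_float=4):
    """Raw parameter storage in gigabytes (10**9 bytes), float32 by default."""
    return num_gaussians * floats_per_gaussian(sh_degree) * bytes_per_float / 1e9


print(floats_per_gaussian(3), floats_per_gaussian(0))  # 59 14
print(scene_memory_gb(10_000_000))                     # 2.36
```

Dropping to degree-0 (view-independent) color cuts the per-Gaussian footprint by roughly 4×, one of several levers for trading fidelity against memory.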

Below are some reconstructions generated from data collected by Gatik’s autonomous trucks using these 3D reconstruction methods. Once we have high-fidelity 3D reconstructions, we can render novel viewpoints and manipulate the ego vehicle and other actors to simulate different scenarios.

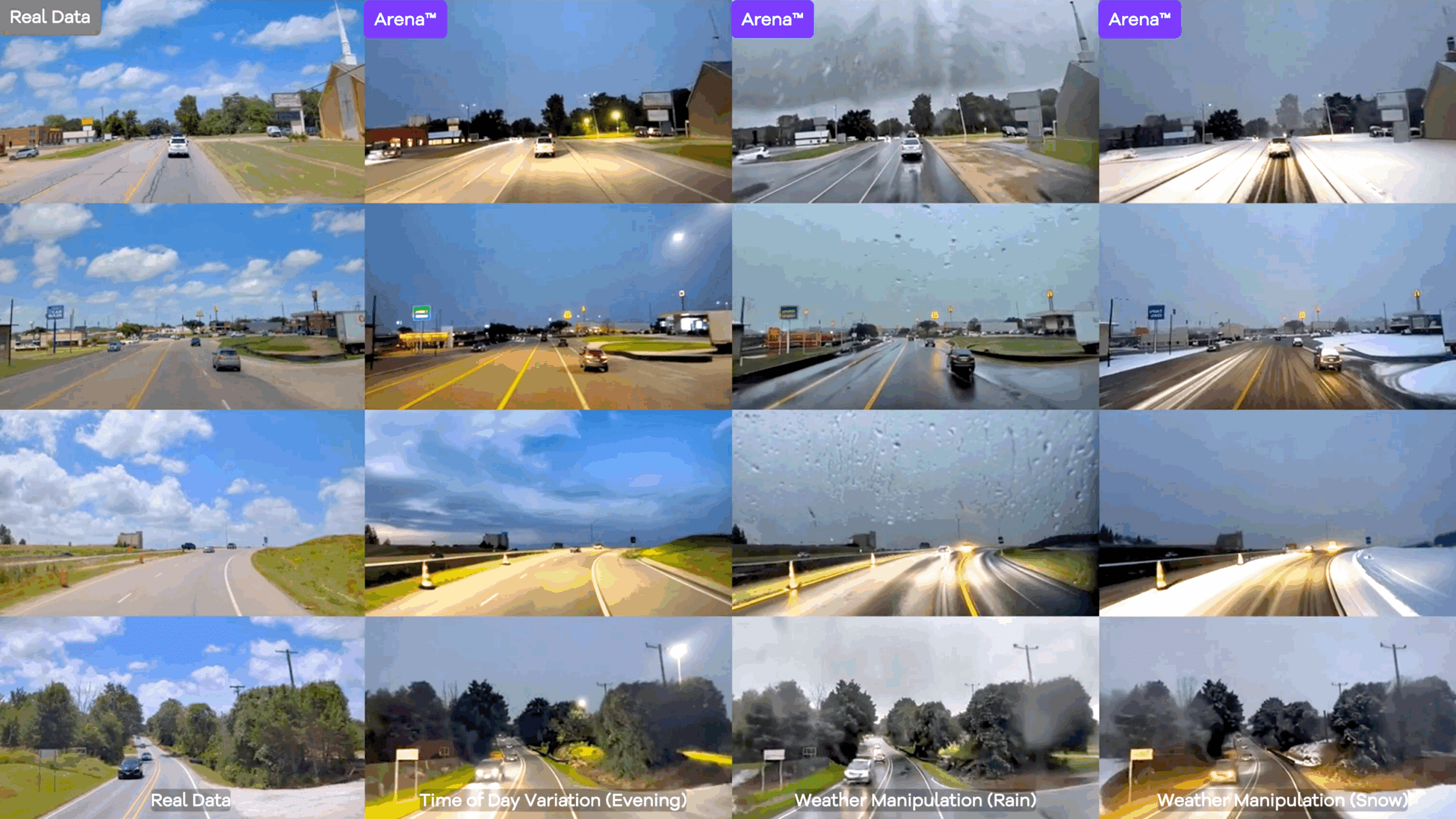

Weather, Lighting, and Geographic Variations

A critical element of building a digital twin is simulating dynamic realism – light and shadow throughout the day, varied weather effects, and evolving road and environmental conditions. Recent advances in conditional diffusion and world model architectures have unlocked powerful video generation capabilities tailored to autonomous driving and robotics use cases, including the generation of high-fidelity, multi-view driving scenarios conditioned on vehicle dynamics and traffic context.

To develop Gatik Arena™, we integrated NVIDIA Cosmos world foundation models (WFMs). Cosmos provides a suite of pretrained generative world models, safety guardrails, and optimized data pipelines for physical AI applications like AV simulation. This integration enables us to recreate every rain-slicked intersection, jaywalking pedestrian, or sensor-degraded scenario with high physical and visual fidelity – while also introducing geographic variations, such as changes in terrain and evolving environments across different regions.

With full control over variables such as traffic flow, lighting, weather, and sensor noise, our engineers can spin up complex, high-stakes simulations in parallel – allowing faster iteration while keeping a truck off public roads until it’s mission-ready.

Closed Loop Simulation

Gatik Arena™ integrates with Gatik Driver – our purpose-built autonomous driving stack – to deliver closed-loop simulation that goes far beyond software-only testing. Every hardware element of the AV platform, from sensors and compute to vehicle control systems, is modeled with high fidelity, ensuring that the simulated performance mirrors real-world behavior under equivalent conditions.
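What separates closed-loop from open-loop (log-replay) testing is that the stack’s own commands change what it senses next. The toy loop below makes that feedback explicit with hypothetical stand-ins: a noise-free lateral-offset sensor, a proportional steering policy in place of the driving stack, and very simplified lateral kinematics. None of these names or gains come from Gatik Driver; in an open-loop replay, the sensor inputs would come from a recorded log regardless of what the policy commanded.

```python
import math
from dataclasses import dataclass


@dataclass
class TruckState:
    lateral_offset_m: float  # signed distance from the lane center
    heading_rad: float       # heading relative to the lane direction


def simulate_sensor(state):
    """Hypothetical sensor model: reports the true lateral offset (no noise)."""
    return state.lateral_offset_m


def driver_policy(measured_offset, heading_rad):
    """Hypothetical stand-in for the driving stack: proportional steering-rate command."""
    return -0.5 * measured_offset - 1.0 * heading_rad


def step_dynamics(state, steer_rate, speed_mps=10.0, dt=0.1):
    """Very simplified lateral kinematics: the command changes the next state."""
    heading = state.heading_rad + steer_rate * dt
    offset = state.lateral_offset_m + speed_mps * math.sin(heading) * dt
    return TruckState(offset, heading)


def run_closed_loop(initial, steps=200):
    """Sense, decide, act, update the world; repeated. Returns the offset trace."""
    state, trace = initial, [initial.lateral_offset_m]
    for _ in range(steps):
        measurement = simulate_sensor(state)                     # simulator produces the sensor input
        command = driver_policy(measurement, state.heading_rad)  # stack reacts to it
        state = step_dynamics(state, command)                    # reaction feeds back into the world
        trace.append(state.lateral_offset_m)
    return trace


trace = run_closed_loop(TruckState(lateral_offset_m=1.0, heading_rad=0.0))
```

Starting 1 m off center, the offset settles toward zero only because each steering command alters the next measurement; a replayed log cannot capture that interaction.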

This realism allows us to precisely replicate the physical characteristics of our hardware, the complexities of sensor perception, and the dynamics of our truck platforms. As a result, Gatik Driver responds to simulated inputs exactly as it would on the road – whether interpreting lidar returns on a foggy morning, managing compute load during dense urban operations, or executing precise steering and braking maneuvers. By emulating both the external environment and internal vehicle systems with high accuracy, Gatik Arena™ can replace a significant portion of on-road testing, running thousands of realistic, repeatable scenarios across varied weather, lighting, terrain, and sensor-degradation conditions before the truck ever operates on public roads.

Powering the Scale-Up of Driverless Freight Networks

Gatik Arena™ elevates simulation from a proving ground to a scaling platform. By combining hardware-accurate modeling, sensor-faithful realism, and full-environment control, Gatik Arena™ allows us to accelerate development, validation, and optimization of Gatik Driver across diverse operating domains without the bottlenecks of on-road testing.

This capability means we can qualify new routes, vehicle platforms, and environmental conditions in parallel, dramatically reducing the time from development to driverless operations. Gatik Arena™ is not just part of our toolchain – it’s the scaling engine behind our Freight-Only strategy, enabling us to expand safely, efficiently, and at unprecedented speeds.

About Gatik

Gatik AI Inc. develops AI-powered autonomous trucking solutions for regional logistics networks. The Gatik Driver™ is a scalable, interpretable AI system purpose-built to enable safe, consistent, and high-frequency freight movement. Proven in real-world driverless operations for Fortune 50 customers, Gatik’s technology enhances the reliability and cost efficiency of B2B supply chains at scale.

Founded in 2017, Gatik has driverless trucks commercially deployed on public roads across multiple markets, including Texas, Arkansas, Arizona, Nebraska, and Ontario. Supported by close collaborations with strategic partners, including Isuzu Motors Limited, NVIDIA, and Ryder, Gatik is advancing reliable and efficient freight operations at scale.

Gatik, the Gatik logo, and Gatik Driver are trademarks or registered trademarks of Gatik AI Inc. Isuzu and the Isuzu logo are trademarks or registered trademarks of Isuzu Motors Limited. All other company and product names may be trademarks of the respective companies with which they are associated.

Copyright © 2026 Gatik AI Inc. This content may be reproduced or quoted for editorial purposes with proper attribution to Gatik. All other rights reserved.

Safe Harbor Statement

This press release contains forward-looking statements within the meaning of applicable securities laws, including, but not limited to, statements regarding Gatik’s business strategy, commercial deployment at scale, and anticipated technological performance. These statements are based on current expectations and involve inherent risks and uncertainties. Actual results may differ materially due to factors including, but not limited to: the safety and reliability of autonomous driving technology; shifts in the regulatory landscape for AI and autonomous vehicles; cybersecurity threats and data privacy compliance; global supply chain disruptions; and changes in market demand for middle-mile logistics. Gatik undertakes no obligation to update these statements to reflect events or circumstances after the date of this release, except as required by law. All forward-looking statements are qualified in their entirety by this cautionary statement.